Building Agentic workflows for code generation: Sharing the journey

Reading Time: 5 minutes

Agentic workflows are new, yet they evolve almost daily, with fresh tools and architectures appearing as we learn to apply them in real-world applications. These are my insights from Anima’s ongoing journey to automate front-end engineering with AI design-to-code solutions.

Website cloning and copyright issues

Reading Time: 2 minutes

Cloning sites isn’t about shortcuts; it’s about context. We share the thinking behind our new website cloning feature: how it helps teams prototype, modernize, and vibe code starting from real products, and the guardrails we’ve built to keep it ethical.

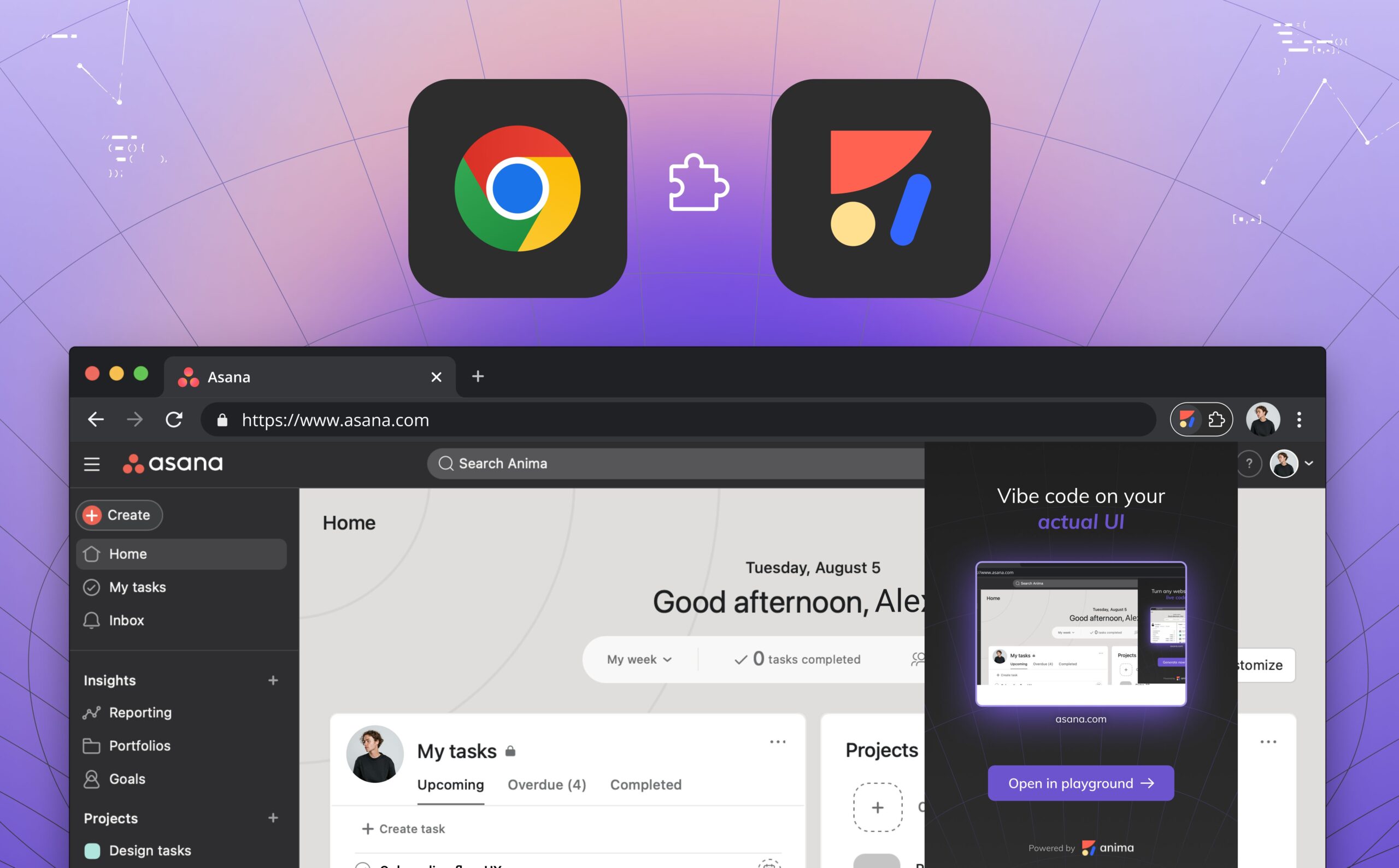

Clone and convert private sites / internal pages to React/HTML

Reading Time: 3 minutes

Convert private or internal web pages into clean, editable React or HTML code with Anima’s on-brand vibe coding extension. Capture secure pages, keep enterprise-grade privacy, and instantly tweak layouts, colors, and content in Anima Playground.

Rise of the vibe designgineer

Reading Time: 3 minutes

Explore the rise of vibe coding and the emerging role of the 'vibe designgineer': how designers, PMs, and engineers are using AI tools to build functional products without writing code.

Anima API: Bringing Figma to coding AI agents

Reading Time: 4 minutes

Anima API bridges Figma and coding AI agents, delivering pixel-perfect, production-ready code in React, HTML, and Tailwind. Power vibe coding platforms, prototypes, and automation tools with the same engine trusted by 1.5M Figma users.

11 Vibe Coding tips

Reading Time: 6 minutes

Learn 11 practical vibe coding tips to avoid hitting walls when building with AI, from smart prompting and context management to saving progress and choosing the right tech stack.

How AI agents are reshaping design platforms: What designers and PMs need to know about AX

Reading Time: 3 minutes

Discover how AI agents are reshaping design platforms through Agent Experience (AX), enabling automation, collaboration, and innovation for designers and product managers.

Design and code – an ever evolving challenge

Reading Time: 6 minutes

Explore the evolving challenges of bridging design and code as companies scale. Learn how design systems, Figma integrations, and AI tools like Anima's Frontier can address design drift, documentation gaps, and onboarding hurdles, creating a seamless collaboration between designers and developers.

Modernize Frontend in 2025: Future-proof your web and app development

Reading Time: 4 minutes

Discover essential strategies for modernizing your frontend in 2025. Learn how component-based architectures, design systems, edge rendering, microfrontends, and tools like Anima and Frontier by Anima can future-proof your web and app development, enhancing scalability, security, and performance.

Minimizing LLM latency in code generation

Reading Time: 2 minutes

Discover how Frontier optimizes front-end code generation with advanced LLM techniques. Explore our solutions for balancing speed and quality, handling code isolation, overcoming browser limitations, and implementing micro-caching for efficient performance.

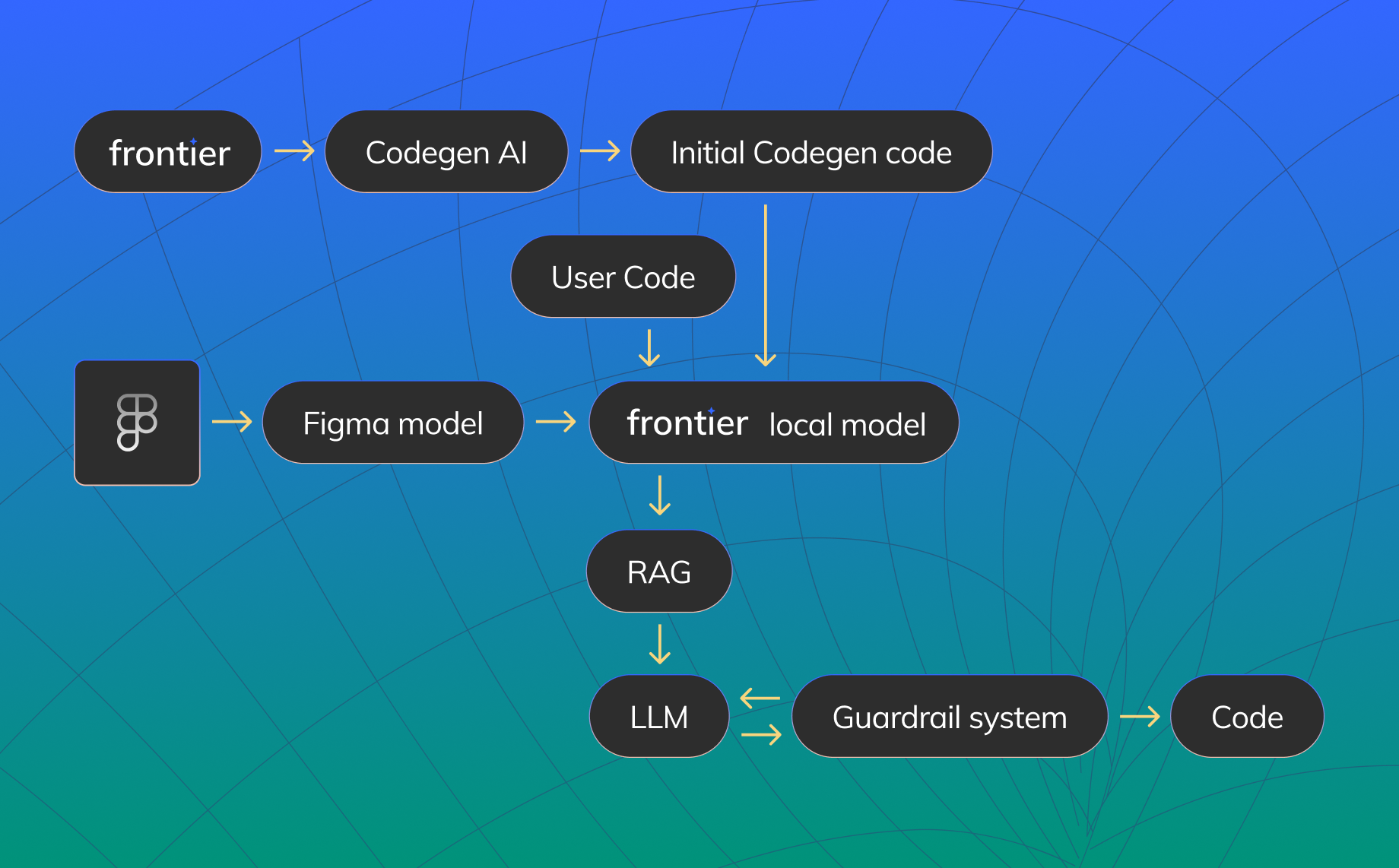

Guard rails for LLMs

Reading Time: 3 minutes

The conclusion is that you cannot ignore hallucinations. They are an inherent part of LLMs and require dedicated code to overcome. In our case, we give the user a way to supply even more context to the LLM, in which case we explicitly ask it to be more creative in its responses. This is an opt-in solution for users and often generates better placeholder code for components based on existing usage patterns.

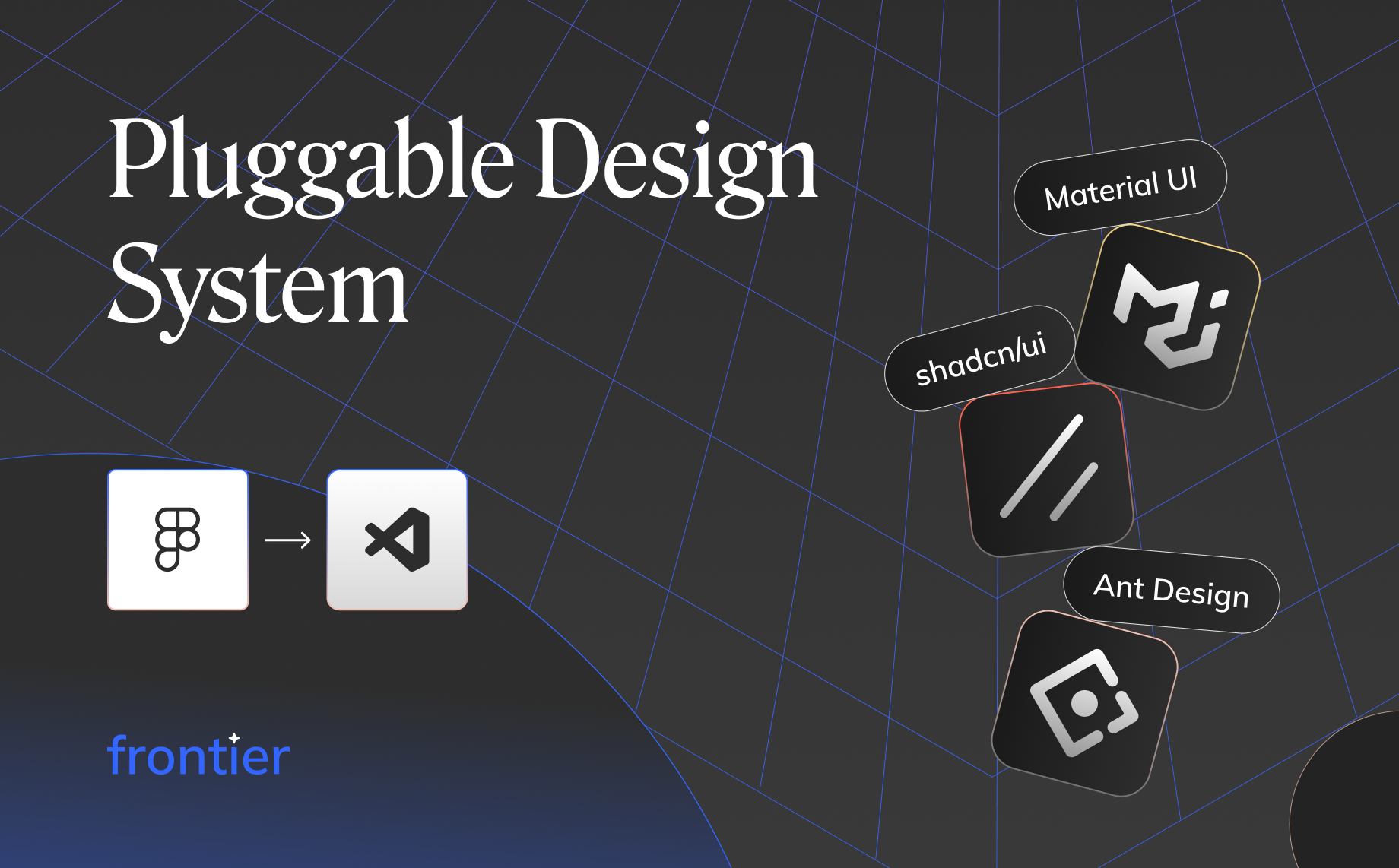

Pluggable design system – Figma to your design system code

Reading Time: 3 minutes

When we created Frontier, we didn’t want to stick to just one coding design system. MUI, for example, is a very popular React design system, but it’s one of very many design systems that are rising and falling. Ant Design is still extremely popular, as is the TailwindCSS library. We’re also seeing the rapid rise of Radix-based component libraries like ShadCN, as well as Chakra and NextUI.

Does Frontier support NextJS?

Reading Time: 2 minutes

Short answer: Yes! When Frontier detects NextJS, it generates client components by default. This is done by adding the ‘use client’ directive at the top of the component file.
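
As a rough illustration of that behavior, here is a minimal TypeScript sketch. It is not Frontier's actual implementation: the names `usesNextJs` and `asClientComponent` are hypothetical, and treating a project as NextJS whenever `next` appears in its `package.json` dependencies is an assumption made for this example.

```typescript
// Hypothetical sketch; none of these names come from Frontier itself.
type PackageJson = {
  dependencies?: Record<string, string>;
  devDependencies?: Record<string, string>;
};

// The Next.js directive is written with a space, not a hyphen.
const USE_CLIENT = '"use client";';

// Assumption: a project counts as NextJS if `next` is a listed dependency.
function usesNextJs(pkg: PackageJson): boolean {
  return Boolean(pkg.dependencies?.["next"] ?? pkg.devDependencies?.["next"]);
}

// Prepend the directive to generated component source,
// unless the project is not NextJS or the source already starts with it.
function asClientComponent(source: string, pkg: PackageJson): string {
  if (!usesNextJs(pkg)) return source;
  const alreadyMarked = /^\s*["']use client["'];?/.test(source);
  return alreadyMarked ? source : `${USE_CLIENT}\n\n${source}`;
}

// Usage: the generated component gains the directive as its first line.
const generated = "export function Button() { return null; }";
console.log(asClientComponent(generated, { dependencies: { next: "14.0.0" } }));
```

The directive has to come before any imports or other code in the file, which is why the sketch prepends it and does not insert it next to the component function.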

Generative code: how Frontier solves the LLM Security and Privacy issues

Reading Time: 3 minutes

AI and LLM code generation typically suffer from privacy and security issues, particularly for enterprise users. Frontier is a VSCode extension that generates code through LLMs and uses local AI models to firewall the user's data and codebase from exposure to the LLM. This unique approach isolates the codebase and ensures compliance and inter-developer cooperation without compromising the security of the code repo.

LLMs Don’t Get Front-end Code

Reading Time: 3 minutes

Ofer's piece delves into the evolving role of AI in front-end development, debunking myths about replacing human developers. Share your thoughts with us too!
